Mayank Patel

Aug 20, 2025

Last updated Aug 20, 2025

Customers hop between storefronts, apps, marketplaces, and in-store screens, and they expect the story about your products to match everywhere. The only way to meet that bar is to store content as clean, structured data and serve it to whatever front end needs it. That’s the job of content modeling.

This guide walks through a practical “101” for modeling content in dotCMS. You’ll learn how to define types, fields, and relationships that reflect your business, keep presentation concerns out of the CMS, enable localization and multi-site, and enforce quality with workflow.

Omnichannel content delivery means your messaging remains consistent across every channel while also being optimized for the context of each channel. In an omnichannel retail example, a customer might discover a product on a marketplace, check details on your website, receive a promotion via email or SMS, and finally buy in-store.

Traditional web-oriented CMS platforms often struggle here. They were built to push content into tightly-coupled webpages, not to serve as a hub for many diverse outputs. Many legacy CMSs lack the flexibility to easily repurpose content for mobile apps, IoT devices, or third-party platforms, and their rigid page-centric models make omnichannel consistency hard to maintain.

In contrast, modern headless and hybrid CMS solutions promise to meet omnichannel needs. Headless CMS decouples the content repository from any specific delivery channel, exposing content via APIs. This decoupling gives organizations the agility to present content on any channel from a unified backend.

However, pure headless systems sometimes sacrifice the user-friendly authoring experience that content teams want. This is where hybrid CMS platforms like dotCMS shine. They combine the flexibility of headless architecture with tools for easy authoring and preview. dotCMS enables a “create once, publish everywhere” approach, which supports omnichannel content distribution, reduces duplicate work, and speeds up time-to-market.

What is Content Modeling? (And Why It Matters for Omnichannel)

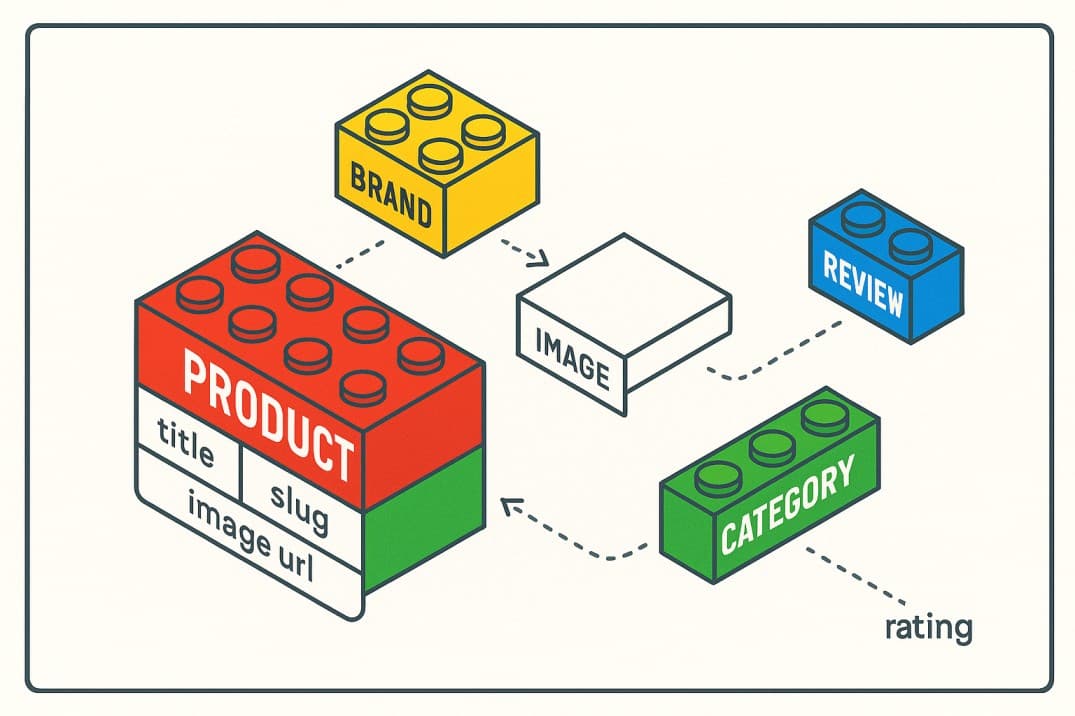

Content modeling is the process of defining a structured blueprint for your content: identifying what types of content you have, what fields or elements make up each type, and how those pieces relate to each other. Instead of treating a piece of content as a big blob of text (like a lengthy web page), you break it down into meaningful chunks: titles, descriptions, images, metadata, categories, and so on.

For example, a Product content type might include fields for product name, description, price, images, specifications, and related products or categories. A Blog Article content type might include title, author, publish date, body text, and tags. When content is neatly structured and stored, you can present it on a website in one format, in a mobile app with a different layout, or even feed it into a chatbot or voice assistant, all without duplicating content or manual copy-paste.
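
To make the idea concrete, here is a minimal Python sketch of a structured Product entry rendered for two channels. The class, field names, and both render functions are hypothetical illustrations, not dotCMS APIs; the point is only that one structured record can feed different presentations.

```python
from dataclasses import dataclass, field


@dataclass
class Product:
    """Hypothetical structured Product entry; field names are illustrative."""
    name: str
    description: str
    price: float
    images: list[str] = field(default_factory=list)
    specifications: dict[str, str] = field(default_factory=dict)


def render_web(product: Product) -> str:
    """Full layout for a website product page."""
    specs = "".join(f"<li>{k}: {v}</li>" for k, v in product.specifications.items())
    return f"<h1>{product.name}</h1><p>{product.description}</p><ul>{specs}</ul>"


def render_voice(product: Product) -> str:
    """Short spoken summary for a voice assistant."""
    return f"{product.name} costs {product.price:.2f}."


shoe = Product(
    name="Trail Runner",
    description="Lightweight shoe for rough terrain.",
    price=89.5,
    specifications={"Weight": "240 g"},
)
```

Both renderers read the same `shoe` record, so a correction to the description or price is made once and shows up in every output.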

dotCMS is an enterprise-grade content management system built with omnichannel needs in mind. It advertises itself as a "Hybrid Headless" or "Universal CMS", meaning it blends the API-first flexibility of a headless CMS with the ease-of-use of a traditional CMS for editors. From a content modeling perspective, dotCMS provides a rich toolset to define and manage your content structure without heavy development effort.

Here are some of the dotCMS features to understand:

In dotCMS, you can define unlimited content types to represent each kind of content in your business (products, articles, events, locations, customer testimonials, etc.). Each content type has fields (text, rich text, images, dates, geolocation, etc.) that you configure. Importantly, all content types are completely customizable through a no-code interface; you don’t need a developer to add a new field or change a content model. Content in dotCMS is stored in a central repository and structured by these content types, rather than as unstructured blobs.

dotCMS treats content as data. A single piece of content (say a Product or an Article) lives in one place in the CMS but can be referenced or pulled into any number of front-end presentations. Authors can create content once and then use dotCMS’s features to share it across pages, sites, and channels effortlessly. For example, you might have one canonical product entry that is displayed on your public website, inside your mobile app, and on an in-store screen, all fed from the same content item.

dotCMS provides no-code tools for tagging and categorizing content, as well as defining relationships between content types. For instance, you can relate an “Author” content item to a “Blog Post” item to model a one-to-many relationship, or relate “Products” to “Categories”. These relationships make it possible to build rich, dynamic experiences (e.g., listing all articles by a certain author, or showing related products in the same category). They also improve content discovery and personalization. The ability to create and manage these relations through a friendly UI means your content model can truly reflect the reality of your business (how things connect) without custom development.
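
The Author and Blog Post relationship described above can be sketched in plain Python. This is a hypothetical in-memory model, not how dotCMS stores relationships; it only shows why referencing an author by ID (rather than copying the author's name into every post) makes "all articles by this author" a simple lookup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Author:
    id: str
    name: str


@dataclass(frozen=True)
class BlogPost:
    title: str
    author_id: str  # reference to an Author, not a copy of the author's data


def posts_by_author(posts: list[BlogPost], author: Author) -> list[str]:
    """Return the titles of every post that references the given author."""
    return [post.title for post in posts if post.author_id == author.id]


ana = Author(id="a1", name="Ana")
posts = [
    BlogPost(title="Modeling 101", author_id="a1"),
    BlogPost(title="Headless Basics", author_id="a2"),
    BlogPost(title="Taxonomy Tips", author_id="a1"),
]
```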

dotCMS was built with multi-channel delivery in mind. It allows you to create content once and deliver it anywhere via APIs. Under the hood, dotCMS offers both REST and GraphQL APIs to retrieve content, so your front-end applications (website, mobile app, IoT device, etc.) can query the content they need. Because every channel reads from the same content types, the content model you define is enforced across all channels.
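
As a rough illustration of what a front end might send, the sketch below builds a GraphQL request body in Python without making a network call. The collection name `ProductCollection`, the field names, and the `limit` argument are assumptions for illustration; check the dotCMS GraphQL documentation for the exact schema generated from your own content types.

```python
import json


def build_graphql_payload(collection: str, fields: list[str], limit: int = 10) -> str:
    """Build a JSON request body that asks for selected fields of a collection."""
    selection = " ".join(fields)
    # Assumed query shape: { <Collection>(limit: N) { field1 field2 } }
    query = f"{{ {collection}(limit: {limit}) {{ {selection} }} }}"
    return json.dumps({"query": query})


# Hypothetical collection and field names.
payload = build_graphql_payload("ProductCollection", ["title", "shortDescription"], limit=5)
```

A mobile card and a full product page could call the same helper with different field lists, so each channel fetches only what it renders.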

One standout feature of dotCMS is its Universal Editing capabilities for content creators. Even though content may be delivered headlessly, dotCMS provides content teams with a visual editing and preview experience that works across different channel outputs. For example, the dotCMS Universal View Editor allows authors to assemble and preview content as it might appear on various devices or channels, all within the CMS interface. This means marketers can, say, adjust a landing page and see how it will look on a desktop site, a mobile app, or other contexts without needing separate systems.

Large enterprises often serve multiple websites, brands, or regions, each a "channel" of its own. dotCMS supports multi-tenant content management, meaning you can run multiple sites or digital experiences from one dotCMS instance, reusing content where appropriate and varying it where needed. For example, you might have a global site and several country-specific sites; with dotCMS, you can share the core content model and even specific content items across them, while still allowing localization and differences where necessary. This feature amplifies content reusability for omnichannel because not only are you delivering to different device channels, but also to different sites/audiences from the same content hub.

A true omnichannel content strategy rarely lives in a vacuum; it often needs to integrate with other systems (e.g., e-commerce platforms, CRMs, personalization engines, mobile apps). dotCMS is API-first and integrates with other systems through REST, GraphQL, webhooks, and plugins. This openness means your content model in dotCMS can be the central content service not just for your own channels but for other applications as well. For instance, if you have a separate mobile app or a voice assistant platform, they can pull content from dotCMS. If you use a third-party search engine or commerce engine, dotCMS can connect to it. The ability to plug into a composable architecture is important; dotCMS is often deployed alongside best-of-breed solutions (for example, integrations with e-commerce engines such as Commercetools and Fynd are supported out of the box).

(dotCMS for content · MedusaJS for commerce · Fynd for channel sync)

TL;DR: Use dotCMS as your single source of truth for content, MedusaJS as the headless commerce engine, and Fynd to unify online/offline channels. Each does what it’s best at; together they deliver one seamless customer experience.

Why this matters (for CTOs)

Omnichannel isn’t just “show the same thing everywhere.” It’s a promise that product info, branding, and availability stay consistent across web, app, kiosk, marketplace, and POS without your teams duplicating work. The cleanest way to keep that promise is a composable stack where each system does one job extremely well and everything talks over APIs.

Start with dotCMS as the content brain. Treat product copy, specs, imagery, promos, and SEO as structured content modeled once, governed once, and delivered everywhere through REST or GraphQL. Editors get a friendly, hybrid authoring experience; engineers get predictable schemas and stable APIs. Because content is cleanly separated from presentation, you can render it in any UX, from a React website to a kiosk UI, without rewriting the source.

Pair that with MedusaJS on the commerce side. MedusaJS is a headless Node.js engine for catalogs, variants, pricing, carts, checkout, orders, and payments. It doesn’t prescribe a front end and plays nicely with webhooks and plugins. Think of it as the transactional core that your UIs (and channel tools) can query for the real-time bits: price, stock, and order state.

Now widen the lens with Fynd to unify online and offline channels. Fynd syncs inventory and orders across marketplaces and in-store systems, so the pair of shoes shown on your site matches what’s available at the mall and what’s listed on third-party marketplaces. When Fynd needs rich product content (names, descriptions, images, feature bullets), it can pull that from dotCMS.

Here’s how a typical flow feels. Your content team creates or updates a Product entry in dotCMS (title, short/long descriptions, hero image, spec table, locale variants). Front ends request that content via GraphQL and render it alongside live commerce data from MedusaJS (price, stock by variant). Fynd consumes the same product content from dotCMS and the same inventory signals from MedusaJS to populate marketplaces and POS.
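
The merge step in that flow can be sketched as a small Python function. The dictionary shapes, the `sku` join key, and the field names are assumptions made for illustration; real dotCMS and MedusaJS responses will look different and would be mapped into something like this first.

```python
def build_product_view(content: dict, commerce: dict) -> dict:
    """Combine editorial content with live commerce data for one product."""
    if content["sku"] != commerce["sku"]:
        raise ValueError("content and commerce records describe different SKUs")
    return {
        "sku": content["sku"],
        "title": content["title"],                   # editorial, from the CMS
        "description": content["shortDescription"],  # editorial, from the CMS
        "price": commerce["price"],                  # transactional, from commerce
        "in_stock": commerce["stock"] > 0,           # transactional, from commerce
    }


# Hypothetical records standing in for the two API responses.
content_entry = {
    "sku": "SHOE-42",
    "title": "Trail Runner",
    "shortDescription": "Lightweight trail shoe.",
}
commerce_record = {"sku": "SHOE-42", "price": 89.5, "stock": 3}

view = build_product_view(content_entry, commerce_record)
```

Keeping the two sources separate until render time means neither system has to store the other's data.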

Content modeling is the linchpin. Define types like Product, Category, Brand, Promo, and Store with clear relationships (Product↔Category, Product↔Promo). Add channel-aware fields: short descriptions for mobile cards, alt text for accessibility, locale fields for multi-region delivery. Wrap it all with dotCMS workflows so high-impact edits are reviewed before they propagate to every channel. The result is “create once, deliver everywhere” with actual guardrails.

Today, a CTO’s goal should be to avoid monolithic systems that try to do everything and instead orchestrate best-of-breed platforms. By modeling your content well in dotCMS and integrating it with your commerce and channel platforms, you achieve the coveted omnichannel experience: the customer gets a unified journey, and your internal teams get maintainable, specialized systems.

To pull this together, here are some practical best practices for content modeling in dotCMS, geared toward omnichannel readiness:

Begin by listing the types of content your business uses (or will use) across channels. Typical domains include products, articles, landing pages, promotions, user profiles, store locations, FAQs, etc. Don’t forget content that might be unique to certain channels (for example, push notification messages or chatbot prompts). Having a comprehensive view prevents surprises later.

For each content type, define the fields it needs. Ensure each field represents a single piece of information (e.g. separate fields for title, subtitle, body text, instead of one blob). Determine which fields are required, which are multi-valued (like multiple images or tags), and use appropriate field types (dates, numbers, boolean, etc., not just text for everything). In dotCMS, setting this up is straightforward via the Content Type builder. Keep future channels in mind: for instance, if you might need voice assistants to read out product info, having a short summary field could be useful. Example: A News Article content type might include fields for Headline, Summary, Body, Author (related content), Publish Date, Thumbnail Image, and Tags. This way, a mobile news feed can use the Headline and Summary, whereas the full website uses all fields.
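
The News Article example can be written down as a small schema with a validator. This is a hypothetical Python sketch of the idea (required fields, multi-valued fields, typed values), not the dotCMS Content Type builder or its API; the field subset is chosen for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldDef:
    name: str
    kind: type            # expected Python type of a single value
    required: bool = False
    multi: bool = False   # True when the field holds a list of values


NEWS_ARTICLE = [
    FieldDef("headline", str, required=True),
    FieldDef("summary", str, required=True),
    FieldDef("body", str, required=True),
    FieldDef("tags", str, multi=True),
]


def validate(entry: dict, schema: list[FieldDef]) -> list[str]:
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    for field_def in schema:
        value = entry.get(field_def.name)
        if value is None:
            if field_def.required:
                errors.append(f"{field_def.name}: missing required field")
            continue
        if field_def.multi:
            if not isinstance(value, list):
                errors.append(f"{field_def.name}: expected a list")
                continue
            values = value
        else:
            values = [value]
        if not all(isinstance(v, field_def.kind) for v in values):
            errors.append(f"{field_def.name}: expected {field_def.kind.__name__}")
    return errors
```

For instance, `validate({"headline": "A", "body": 3}, NEWS_ARTICLE)` reports the missing summary and the wrongly typed body, which is the kind of feedback a typed field gives you and a free-text blob cannot.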

Organize your content using categories, tags, or folders offered by dotCMS. Taxonomy is hugely helpful for dynamic content delivery, e.g., pulling “all articles tagged with X for the mobile app home screen” or “all products in category Y for this landing page.” Define a tagging scheme or category hierarchy that makes sense for your domain. dotCMS allows tagging content items and using those tags to assemble content lists without coding. Consistent taxonomy also aids personalization (showing content by user interest) and SEO.
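
A query like "all articles tagged with X" reduces to a simple filter once tags are stored as a field. The sketch below uses hypothetical in-memory records in Python; in practice the filtering would happen in the CMS query rather than in client code.

```python
def with_tag(items: list[dict], tag: str) -> list[dict]:
    """Return the items whose 'tags' list contains the given tag."""
    return [item for item in items if tag in item.get("tags", [])]


# Hypothetical article records.
articles = [
    {"title": "Spring Lookbook", "tags": ["fashion", "spring"]},
    {"title": "Store Opening", "tags": ["news"]},
    {"title": "Rain Gear Guide", "tags": ["spring", "outdoor"]},
]

spring_titles = [a["title"] for a in with_tag(articles, "spring")]
```

The filter only works if tags are applied consistently, which is why an agreed tagging scheme matters more than the tooling.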

A key headless content modeling principle is to separate content from design. In dotCMS, avoid embedding HTML/CSS styling or device-specific details in your content fields. For example, use plain text fields and let your front-end apply the styling. This keeps the content truly channel-agnostic. If you need variations of content for different channels (like a shorter title for mobile), model that explicitly (e.g., a field “Mobile Title” separate from “Desktop Title”) rather than overloading one field with both.
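
Explicit per-channel fields keep the selection logic trivial on the front end. In this Python sketch the keys `mobileTitle` and `desktopTitle` are hypothetical names for the "Mobile Title" and "Desktop Title" fields, and falling back to the desktop title when no mobile title exists is one possible design choice, not a dotCMS behavior.

```python
TITLE_FIELDS = {"mobile": "mobileTitle", "desktop": "desktopTitle"}


def title_for(entry: dict, channel: str) -> str:
    """Return the channel-specific title, falling back to the desktop title."""
    key = TITLE_FIELDS[channel]
    return entry.get(key) or entry["desktopTitle"]


entry = {
    "desktopTitle": "Trail Runner Lightweight Running Shoe",
    "mobileTitle": "Trail Runner",
}
```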

If you operate in multiple locales or brands, design your content model to accommodate that. dotCMS has multilingual support and multi-site features. Decide which content types will need translation or variation by locale. dotCMS can manage translations of content items side-by-side. Structuring your content well (and not hard-coding any language-specific text in fields) will pay off when you need to roll out in another language or region. Similarly, if running multiple sites, plan which content types are shared globally and which are specific to a site. dotCMS’s multi-tenant capabilities will allow content to be shared or isolated as needed.
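
One question worth settling early is what a channel shows when a translation is missing. The Python sketch below illustrates a common fallback order (exact locale, then base language, then a default); the data shape and the fallback rule are assumptions for illustration and do not describe how dotCMS resolves languages.

```python
def localize(translations: dict[str, dict], locale: str, default: str = "en-US") -> dict:
    """Pick the best available translation for a locale such as 'fr-CA'."""
    language = locale.split("-")[0]
    for candidate in (locale, language, default):
        if candidate in translations:
            return translations[candidate]
    raise KeyError(f"no translation for {locale!r} and no {default!r} fallback")


# Hypothetical translations of one content item, keyed by locale.
translations = {
    "en-US": {"title": "Trail Runner"},
    "fr": {"title": "Chaussure de trail"},
}
```

Here a request for `fr-CA` gets the generic French entry, while `de-DE` falls back to the `en-US` default.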

As you roll out an omnichannel content hub, establish workflows and approval processes for content changes. This ensures that a change in content (which could affect many channels at once) is reviewed properly. dotCMS allows you to configure custom workflows with steps like review, approve, publish. Especially for large teams, this is a safety net so that your carefully modeled content isn't inadvertently altered and pushed everywhere without checks. It also helps assign responsibility (e.g., legal can approve terms & conditions content, whereas marketing can freely publish blog content).
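
A review, approve, publish workflow is essentially a small state machine. The sketch below models one in Python with hypothetical step names and transitions; dotCMS workflows are configured in the product itself, so treat this only as a way to reason about which moves should be allowed.

```python
# Allowed transitions between hypothetical workflow steps.
TRANSITIONS = {
    "draft": {"review"},
    "review": {"draft", "approved"},  # reviewers can send content back
    "approved": {"published"},
    "published": set(),
}


def advance(state: str, target: str) -> str:
    """Move to the target step, or raise if the workflow does not allow it."""
    if target not in TRANSITIONS.get(state, set()):
        raise ValueError(f"cannot move from {state!r} to {target!r}")
    return target
```

With these rules, `advance("draft", "published")` raises an error, so nothing reaches every channel without passing through review and approval.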

When designing your content model in dotCMS, test it by retrieving content via the API and rendering it in different channel contexts. Build a simple prototype of a website page and a mobile screen (or whatever channels you plan) to see if the content model fits well. You might discover you need an extra field or a different structure. dotCMS’s content API and even its built-in presentation layer (if you choose to use it in hybrid mode) can be used to do these dry runs.
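
A cheap version of this dry run is to list the fields each channel needs and check sample entries against that list. The channel names and field sets in this Python sketch are hypothetical; substitute whatever your prototypes actually render.

```python
# Fields each channel needs in order to render an entry (illustrative).
CHANNEL_NEEDS = {
    "web": {"title", "body", "heroImage"},
    "mobile": {"title", "summary"},
}


def missing_fields(entry: dict, channel: str) -> set[str]:
    """Return the fields a channel needs that are absent or empty in the entry."""
    return {name for name in CHANNEL_NEEDS[channel] if not entry.get(name)}


sample = {
    "title": "Trail Runner",
    "body": "Long description.",
    "heroImage": "shoe.png",
}
```

Running the check on `sample` shows the web channel is satisfied while the mobile channel still lacks a `summary`, which is exactly the kind of gap a prototype is meant to surface.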

Technology is only part of the equation. Implementing content modeling for omnichannel success also requires strategy and expertise. Linearloop works on building modern digital platforms and has experience with headless CMS implementation, content strategy, and integrating systems like dotCMS with commerce and other services. Drawing on lessons from past projects can help you avoid common pitfalls and accelerate the adoption of an omnichannel content hub.